Selected Publications

The list below shows my recent publications and is updated continually. The publications can be downloaded by following the links below.

SoD-Toolkit: A Toolkit for Interactively Prototyping and Developing Multi-Sensor, Multi-Device Environments

Conference Paper ACM International Conference on Interactive Tabletops & Surfaces (ITS), November 2015, Pages 171-180

Abstract

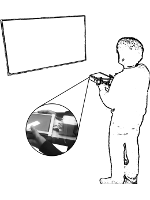

As ubiquitous environments become increasingly commonplace with newer sensors and forms of computing devices (e.g. wearables, digital tabletops), researchers have continued to design and implement novel interaction possibilities. However, as the number of sensors and devices continues to rise, researchers still face numerous instrumentation, implementation and cost barriers before being able to take advantage of the additional capabilities. In this paper, we present the SoD-Toolkit, a toolkit that facilitates the exploration and development of multi-device interactions, applications and ubiquitous environments by using combinations of low-cost sensors to provide spatial awareness. The toolkit offers three main features: (1) a "plug and play" architecture for seamless multi-sensor integration, allowing for novel explorations and ad-hoc setups of ubiquitous environments; (2) client libraries that integrate natively with several major device and UI platforms; and (3) unique tools that allow designers to prototype interactions and ubiquitous environments without a need for people, sensors, rooms or devices. We demonstrate and reflect on real-world case studies from industry-based collaborations that influenced the design of our toolkit, and discuss the advantages and limitations of our toolkit.

A Unified Model for Mapping Gestures to Tracking Sensors in Multi-Surface Environments

Workshop Paper Workshop on Supporting "Local Remote" Collaboration, ACM Computer-Supported Cooperative Work (CSCW), March 2015

Abstract

Multi-surface environments (MSEs) incorporate a wide variety of heterogeneous devices such as smart phones, tablets, tabletops and large displays into a single interactive environment. To enable natural and seamless interaction between these devices, designers and researchers have proposed a variety of gestures to perform different tasks (such as picking, dropping, flicking, etc.) in MSEs. These gestures often require using the spatial layout of the environment, and the spatial information of devices and users within the environment. This means instrumenting the environment or the devices with tracking technologies. However, implementations of these gestures have been subject to the designers’ choice of sensors and technologies, which typically results in the same gesture being implemented in a number of different ways. The lack of a unified model for mapping gestures to tracking sensors motivates our research. We intend to perform a study to elicit gestures in MSEs and to suggest tracking sensors that would enable these gestures.

From Room Instrumentation to Device Instrumentation: Assessing an Inertial Measurement Unit for Spatial Awareness

Workshop Paper Workshop on Collaboration Meets Interactive Surfaces, ACM Interactive Tabletops and Surfaces (ITS), November 2014

Abstract

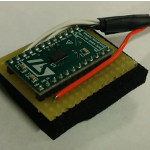

Current implementations of spatially-aware multi-surface environments (MSEs) rely heavily on instrumenting the room with different tracking technologies (e.g. Microsoft Kinect, Vicon cameras). Prior research, however, has shown that real-world deployment using such approaches leads to feasibility issues and to users being uncomfortable with the technology in the environment. In this work, we attempt to address these issues by examining the use of a dedicated inertial measurement unit (IMU) in an MSE. We performed a limited user study and present results suggesting that measurements provided by an IMU do not provide value over sensor fusion techniques for spatially-aware MSEs.

Investigating Inertial Measurement Units for Spatial Awareness in Multi-Surface Environments

Extended Abstract ACM Symposium on Spatial User Interaction (SUI), October 2014, Page 152

Abstract

In this work, we present an initial user study that explores the use of a dedicated inertial measurement unit (IMU) to achieve spatial awareness in multi-surface environments (MSEs). Our initial results suggest that measurements provided by an IMU may not provide value over sensor fusion techniques for spatially-aware MSEs, but warrant further exploration.

Using Multiple Kinects to Build Larger Multi-Surface Environments

Workshop Paper Workshop on Collaboration Meets Interactive Surfaces, ACM Interactive Tabletops and Surfaces (ITS), October 2013

Abstract

Multi-surface environments integrate a wide variety of different devices such as mobile phones, tablets, digital tabletops and large wall displays into a single interactive environment. The interactions in these environments are extremely diverse and should utilize the spatial layout of the room, capabilities of the device, or both. For practical multi-surface environments, using low-cost instrumentation is essential to reduce entry barriers. When building multi-surface environments and interactions based on lower-end tracking systems (such as the Microsoft Kinect) as opposed to higher-end tracking systems (such as the Vicon Motion Tracking systems), both developer and hardware issues emerge. Issues such as the time and effort needed to build a multi-surface environment and the limited range of low-cost tracking systems are the reasons we created MSE-API. In this paper, we present MSE-API, its flexibility with low-cost tracking systems, and industry-based usage scenarios.